I finally got around to a) updating my old WordPress-installation (after finding out spam-bots had already created custom folders on my installation) and b) uploading all my public code to bitbucket (as Mercurial repositories).

That includes my wavelet image compression library, its Mac OS X previewing GUI as well as WowPlot (including some fairly decent Objective-C WoWCombatLog.txt parsing). I converted most of those repositories from darcs (which in the case of my wavelet lib took about 3h to convert from darcs1 to darcs2 format), but on first glance they look alright.

Category Archives: wavelet

Wavelet 3.4.0

A slightly bigger release, which brings two major changes. Not compatible with older files due to the reorder-changes. The improvements to bit.c are not terribly well tested. More here, as usual. As an aside, Kompressor is now served in a ZIP-archive, instead of a DMG…

Changelog

- Overhauled the reordering-code to make the table used independent of the aspect-ratio of the image. This makes old images incompatible with this version of the code. The smallest dimension (in wv_create_reorder_table) is now relevant for the largest table entry. Any image whose smallest dimension is smaller than the one used to create the table originally can safely use it.

- Added a “min bits” criterion to the scheduler, which reserves a certain amount of bits for certain channels. Perceived image quality has improved a fair amount. The same default values are used in Kompressor and main.c.

- We can now pass a write buffer into bit_open(), added bit_free() for deallocating automatically allocated regions. Only accepts lower-case mode-strings now.

- Fixed (and simplified) scheduler preparations for very large absolute target errors.

It’s wavelet bugfix time — 3.3.4 is here!

While compressing a multi-channel file with a target bitrate and no specific target MSEs the resulting bit distribution between the channels seemed rather odd, and comparing the results to an older version revealed that it was indeed totally bogus!

So I changed the target MSE computation in main.c to be more in line with what happens in Kompressor.app, which revealed another bug: tiny negative (i.e. relative) target MSEs passed to wv_query_scheduler() / wv_encode() were converted to 0 (instead of the smallest negative fixed point number representable) and thus interpreted as absolute target MSEs.
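To illustrate the second bug, here is a minimal sketch of a float-to-fixed-point conversion that keeps the sign of tiny values. The 16.16 format, the `fixed_t` type and the name `target_mse_to_fixed` are assumptions made for this example, not the library's actual code.

```c
#include <assert.h>
#include <math.h>
#include <stdint.h>

/* Assumed format for this sketch: signed 32-bit, 16 fractional bits. */
#define FIX_FRAC_BITS 16

typedef int32_t fixed_t;

/* Convert a target MSE to fixed point.
 * Negative inputs mean "relative", so a tiny negative value must not
 * collapse to 0, which would be read as an absolute target. */
static fixed_t target_mse_to_fixed(double mse)
{
    assert(!isnan(mse)); /* NaN is a caller error */

    double scaled = mse * (double)(1 << FIX_FRAC_BITS);

    /* Clamp to the representable range before casting. */
    if (scaled >= 2147483647.0)
        return INT32_MAX;
    if (scaled <= -2147483648.0)
        return INT32_MIN;

    fixed_t f = (fixed_t)scaled; /* the cast truncates toward zero */

    /* Truncation turned a negative input into 0: use the negative
     * value closest to zero instead, so the sign survives. */
    if (mse < 0.0 && f == 0)
        f = -1;

    return f;
}
```

With this, an input like -1e-9 maps to the raw value -1 rather than 0, while 0.0 and tiny positive inputs still map to 0.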

Both of these are fixed in 3.3.4 (and Kompressor.app has also been recompiled with the relevant fix).

Other than those two fixes (both of which only relate to target MSE evaluation when compressing), the code is identical to (and thus fully compatible with) version 3.3.3.

Darcs Repository

Thanks to PHPDarcsView (which is very useful if you are on a hosted server where you are unable to run darcs itself) you can now browse the Wavelet-repository online; the official documentation and Kompressor.app are still here.

Kompressor.app and Wavelet Image Compression Library 3.3.3

Shortly before the year is out (and as a result of my vacation), there is some fresh software to be had… 🙂

I’ve now written a Mac OS X 10.4 application called Kompressor.app to compress, inspect and display WKO images. This release goes hand in hand with version 3.3.3 of the wavelet image compression library itself.

Because this is the first (semi-)proper Mac-application I’ve written, I would welcome any form of testing or feedback people can provide, especially on the user-interface side. The application is universal and thus should work on PPC and Intel Macs.

Here’s a bit (all of it actually) of the supplied online help to get started…

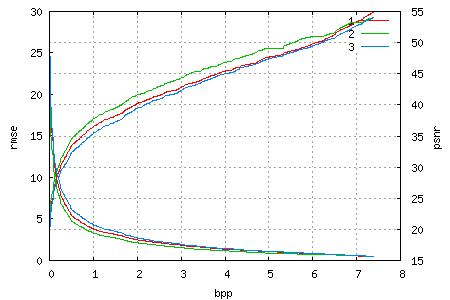

Rate-Distortion Graph

I’ve invested a bit of time in getting some nice rate-distortion graphs out of my wavelet image compression library. Now that it’s embedded, the process is fairly easy: Compress once into a single file and then decompress only enough bits from the file until the desired rate is achieved. As such, the graph also shows the quality over the progress of the file.
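As a rough sketch of the "decompress only enough bits" step: for a target rate in bits per pixel, the number of bytes to read from the front of the file follows directly from the image dimensions. The function name `prefix_bytes_for_rate` and its parameters are made up for this example and are not part of the library.

```c
#include <assert.h>
#include <stddef.h>

/* How many bytes from the start of an embedded file to decode in order
 * to hit a target rate of `bpp` bits per pixel. The result is rounded
 * down and never exceeds the size of the file itself. */
static size_t prefix_bytes_for_rate(size_t width, size_t height,
                                    double bpp, size_t file_bytes)
{
    assert(bpp >= 0.0);

    double want = bpp * (double)width * (double)height / 8.0;

    /* Asking for more than the file holds just means "decode it all". */
    if (want >= (double)file_bytes)
        return file_bytes;

    return (size_t)want;
}
```

For a 512×512 image at 0.5 bits per pixel this gives 16384 bytes. Each point on the graph then corresponds to decoding one such prefix and measuring the error against the original.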

Here is an example of one such graph, for a 708×1024 image of Mena Suvari (which unfortunately comes from a JPEG source and thus has block noise). I picked it because the Lena image (notice the name similarity? ;)) is past its prime IMO; the color version in particular has plenty of noise in the blue channel. The image was encoded in the YCoCg colour-space, which explains the slightly higher quality of the green channel and why even the highest rate is not lossless. Compared to the RGB version, the YCoCg representation incurs a mean-square error of ~0.25 (which results in the root mean-square error of 0.5 shown).

In theory, the rate/distortion graph should have a negative, but monotonically increasing derivative (which in normal language means something like “bigger improvements are closer to the beginning of the file”). We try to achieve this by scheduling data units (which are essentially bitplanes of blocks) by how much they reduce the error in the coded image for each bit of their size. In practice we only get a “close-but-not-quite-monotonically-increasing-slope”, and there are two reasons for this. One is that the error (distortion) is not computed exactly; instead I use an approximation that is based on the wavelet transform used. The other is the fact that we deal with packets / data units / blocks of finite size, and as soon as more than one channel is written to a single file, it becomes evident that a single packet will only improve a single channel – which in turn means the other channels have a stagnating improvement (zero derivative) for the size (or duration) of that packet.
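The scheduling idea can be sketched as a sort by estimated error reduction per bit. This is a simplified illustration, not the library's scheduler: the `data_unit` struct, its fields and `schedule_units` are hypothetical, and the sketch ignores any ordering constraints between the bitplanes of one block.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical data unit: one bitplane of one block. */
typedef struct {
    int    channel;
    int    block;
    int    bitplane;
    double error_reduction; /* estimated drop in error once decoded */
    long   bits;            /* coded size in bits, must be > 0 */
} data_unit;

/* Order by error reduction per bit, largest first. Cross-multiplying
 * compares the two ratios without dividing. */
static int by_gain_per_bit_desc(const void *pa, const void *pb)
{
    const data_unit *a = pa;
    const data_unit *b = pb;
    double lhs = a->error_reduction * (double)b->bits;
    double rhs = b->error_reduction * (double)a->bits;

    if (lhs > rhs) return -1;
    if (lhs < rhs) return 1;
    return 0;
}

/* Put the units into the order in which they would be written. */
static void schedule_units(data_unit *units, size_t n)
{
    assert(units != NULL || n == 0);
    qsort(units, n, sizeof *units, by_gain_per_bit_desc);
}
```

Because each unit belongs to exactly one channel, the other channels' curves stay flat while that unit is being read, which is the stagnation effect described above.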

These plots also make it easier to spot differences (usually either improvements or regressions) for changes in the code, so I’ve created graphs for all my test images, so that I can make these comparisons more easily in the future.

I’ve also taken an old pre-3.1 version (thank you, darcs!) that still used the recursive quantizer selection to produce similar plots (taking much longer as the image had to be compressed anew for each rate) and the result was pretty much a draw, in spite of the embedded version having to store a bit more sideband data (number of bitplanes for each block in the header, and which block is coded next in the bitstream itself). All of which makes the embedded version the “better choice”, due to its other advantages such as simpler code and “compress once, decompress at any rate”.

Wavelet 3.2

As I’ve taken a two-day vacation pre-Easter, I’ve gotten some more work done on my Wavelet Image Compression Library (and not played games as some of my colleagues were led to believe ;)).

Before this version all the subbands of an octave (i.e. HD, VD, and DD coefficients) were written as a single block in an interleaved manner. The changes I’ve made give each subband its own block, which increases the number of blocks, and thus allows for better and more fine-grained scheduling (which increases compression performance). Unfortunately, this led to a three times slower error estimation, which I then managed to cunningly avoid by computing the estimate for 3 blocks at a time.

Other changes include a more consistent behaviour for the -bpp command line switch, bug fixes to the scheduling where my accumulated fixed-point error estimates would overflow, and quite a few other things.

An experimental change was an exact error estimation, which I’ve then removed again as the code became unreadable, it was slow as hell, and didn’t actually help that much.

There is now a Darcs repository here (don’t try browsing there) from which you can get the current version and against which you can send me patches. You can also read my totally unfunny changelog entries if you need more information on the changes between versions.